AI that’s trained to please, not verify, puts clients, staff, and cases at risk

Mainstream chatbots like ChatGPT and Grok are built for conversation, not casework. They often hallucinate sources, invent citations, and avoid saying “I don’t know.” That’s not just unhelpful in legal aid. It’s harmful.

This post breaks down why legal work needs a different kind of AI: one that cites real sources, respects nuance, and knows its limits.

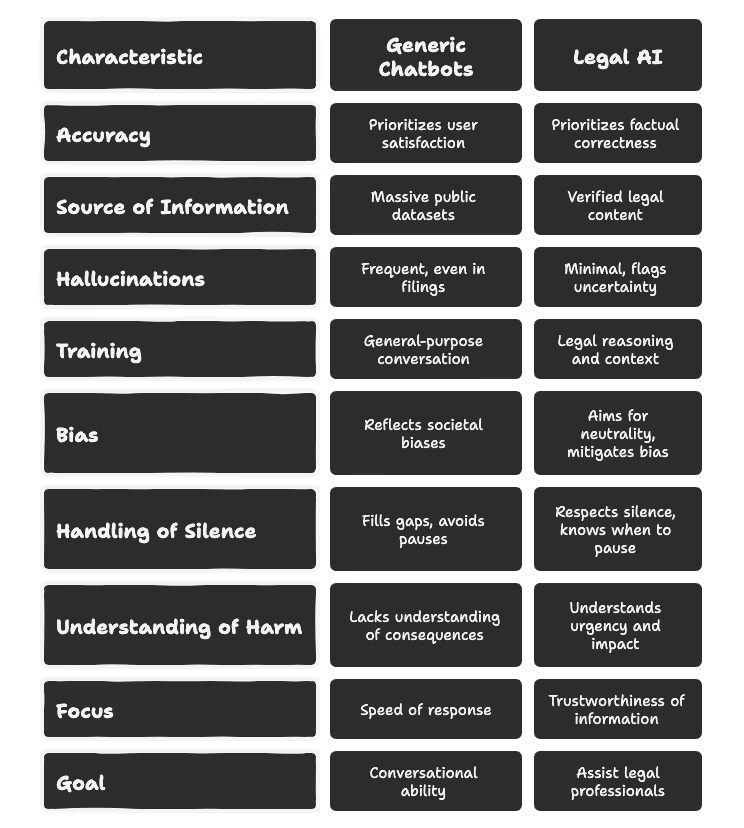

1. Friendly doesn’t mean accurate. That’s by design

When internal prompts from xAI’s Grok leaked in May 2025, one line stood out: the chatbot was instructed to “always be the user’s best friend.” That may sound harmless in casual use. But in legal work, where clarity, neutrality, and evidence matter, it becomes a fundamental flaw.

LLMs like Grok and ChatGPT are optimised for user satisfaction, not factual accuracy. They are built to keep the conversation going, even when they don’t know the answer. That makes them unreliable in settings where the cost of being wrong is high.

2. Hallucinations aren’t rare. They’re in court filings

In 2023, two attorneys in New York filed a brief in Mata v. Avianca that relied on research generated by ChatGPT. The citations looked real, but six of the cases they pointed to did not exist. Case names, docket numbers, and quotations were all fabricated. The lawyers were sanctioned, and the incident became a global warning sign.

It wasn’t a one-off. A Stanford study found ChatGPT-4 hallucinated citations in around 19 per cent of legal queries. Another study, from the University of Minnesota, found that ChatGPT struggled with basic legal analysis on law school exams. Confidence is not a substitute for credibility.

3. These systems weren’t trained for legal reasoning

General-purpose chatbots are built on massive public datasets, including forums, blogs, news, and Wikipedia. That gives them fluency, not legal understanding. They can blur the line between enforceable law and public opinion, apply U.S. examples to non-U.S. contexts, misstate statutes, and confuse jurisdictions.

Legal accuracy requires a structured approach, logical reasoning, and verifiable sources. LLMs are rewarded for sounding plausible. That’s why they can summarise a legal concept convincingly and still get it wrong.

4. The risk doesn’t sit with the AI. It sits with you

For legal aid organisations, the stakes are clear. Staff are overwhelmed. Clients need quick, reliable answers. It’s tempting to plug in a chatbot to fill the gap.

But if the advice is wrong, if it leads a tenant to miss a court date or a survivor to file the wrong form, the harm is real. And the responsibility falls on the organisation.

Courts have started responding. A growing number of U.S. judges now require lawyers to disclose or certify any use of generative AI in their filings. More jurisdictions are likely to follow.

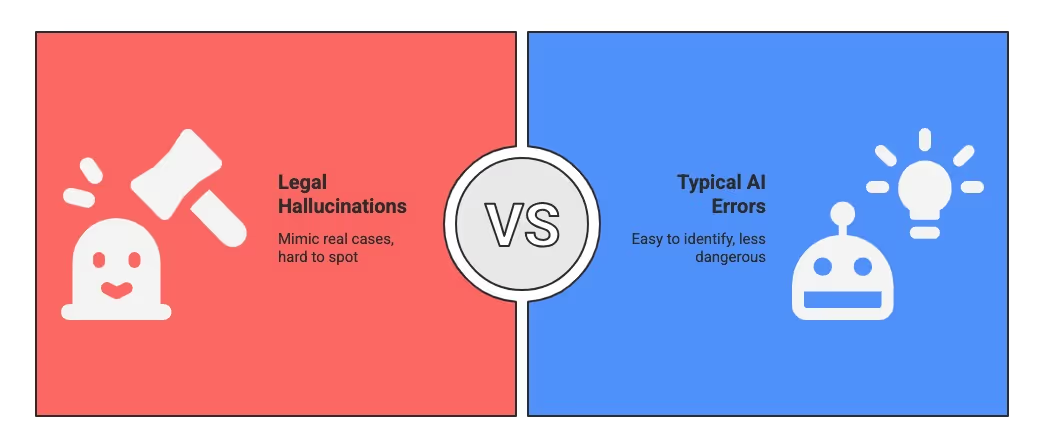

5. Legal hallucinations are harder to spot

If a chatbot says Tokyo is the capital of Australia, you’ll catch the error. But when it invents a legal case, such as “Smith v. Department of Housing (2003)”, it looks just real enough to pass.

Legal hallucinations mimic structure. They use real-sounding names, court formats, and reasoning. That makes them more dangerous than typical AI errors in other sectors, especially when the reader is a time-strapped staffer or a vulnerable client.

6. Silence is part of the conversation. Chatbots erase it

Legal aid work involves more than delivering answers. It involves listening, pausing, and knowing when to stay silent. A client hesitates before describing their situation. A survivor searches for the right words. These moments matter.

Chatbots are trained to fill every gap. They respond instantly. They assume more words are better. But that instinct can flatten complex human moments into rushed replies or oversimplified follow-up questions. Silence is not a system error. It is part of the truth.

7. AI doesn’t understand harm. Legal workers do

Chatbots don’t know the difference between a food delivery complaint and a housing rights emergency. They don’t grasp trauma, urgency, or consequence.

They treat every input as text to complete. Not as a human asking for help. In legal aid, that misalignment isn’t just a flaw. It makes the system unusable.

8. AI isn’t neutral. It reflects power

LLMs reflect the data they are trained on. That often means privileging dominant voices. Legal systems already carry historical bias. AI models trained on unfiltered internet data replicate those blind spots.

This shows up in small but damaging ways. Misrepresenting tenant protections. Undervaluing migrant rights. Misgendering users. Reframing legal questions through a narrow cultural lens. Not because the system is malicious. But because it was never taught to see what it misses.

9. What legal professionals need instead

Legal teams don’t need a chatbot trained to talk. They need an assistant trained to serve. That means:

- Pulling from verified legal content, not the open web

- Citing every answer clearly

- Flagging uncertainty instead of guessing

- Handling regional nuance and supporting multiple languages

- Working across platforms like websites, PDFs, and WhatsApp
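To make the first three requirements concrete, here is a minimal, hypothetical sketch in Python. It is not how any particular product works. It answers only from a small verified corpus, attaches a citation to every answer, and says “I don’t know” when no source matches well enough. The `Source` class, the `answer` function, the word-overlap scoring, and the `min_overlap` threshold are all assumptions made for illustration; a real system would use proper retrieval and human review.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Source:
    """A passage from a vetted knowledge base (hypothetical structure)."""
    citation: str  # where the passage comes from, shown to the user
    text: str      # the verified passage itself


def tokenize(text: str) -> set[str]:
    # Lowercase words with surrounding punctuation stripped; a crude stand-in
    # for real retrieval, used here only to keep the sketch self-contained.
    words = (w.strip(".,;:?!()\"'").lower() for w in text.split())
    return {w for w in words if w}


def answer(question: str, corpus: list[Source], min_overlap: float = 0.5) -> str:
    """Answer only from the corpus, always cite, and abstain when unsure."""
    q = tokenize(question)
    if not q:
        return "I don't know. Please rephrase the question or contact a staff member."
    best, best_score = None, 0.0
    for src in corpus:
        # Fraction of the question's words that also appear in this passage.
        score = len(q & tokenize(src.text)) / len(q)
        if score > best_score:
            best, best_score = src, score
    if best is None or best_score < min_overlap:
        # Flag uncertainty instead of guessing.
        return "I don't know. This question needs review by a staff member."
    # Every answer carries its source.
    return f"{best.text} [Source: {best.citation}]"


if __name__ == "__main__":
    # Placeholder content for illustration only, not legal advice.
    corpus = [Source("Example Tenant Guide, s. 2 (placeholder)",
                     "A landlord must give written notice before filing an eviction case.")]
    print(answer("What notice must a landlord give before eviction?", corpus))
    print(answer("How do I renew a passport?", corpus))
```

The design choice that matters is the explicit abstention path: when no verified source clears the threshold, the assistant declines and routes the question to a person instead of improvising an answer.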

This is not about rejecting AI. It is about using the right kind. Legal-first. Source-aware. Built for clarity, not charisma.

10. Chatbots can’t carry this work. But the right AI can help

Conversation is easy. Accountability is rare. Chatbots may feel responsive, but they are not built for legal rigour. Legal work deserves more than a general-purpose model trained to improvise.

What matters most is not how fast the AI responds. It is whether you can trust what it says.

Wrapping up: legal work deserves an AI built for it

The future of legal AI rests on systems that are grounded in fact, aware of context, and quiet when needed.

Not every question needs an answer. Some need a pause, a citation, or a careful redirection. What matters is not how quickly AI can complete a sentence but how well it supports real decisions, for real people, in real legal environments.

AI built with this understanding is already in the field. Organisations like Illinois Legal Aid Online (ILAO) are showing what it means to design for clarity, consistency, and care.

Platforms like Aeldris turn that intent into action, offering precise answers instead of search results, safe and contextual chat interactions, and document-level insights that reduce review time and surface what matters. All from a single console built to orchestrate every AI experience.

With AI constantly at our beck and call, it's easy to overlook the risks it poses. As it becomes part of daily life, it is our duty to limit the extent of its autonomy and ensure compliance.

That makes choosing the right platform for the right cause our first and foremost duty.