Voice Activity Detection (VAD) is the backbone of modern speech systems. In Text-to-Speech (TTS) pipelines, it trims silence, reduces compute, and improves conversational flow. Without VAD, assistants lag, call centres sound robotic, and accessibility tools stumble.

Open-source stacks like Coqui TTS or ESPnet let you plug in Silero VAD or WebRTC VAD, but tuning them for latency versus accuracy is often difficult. Proprietary APIs such as Amazon Polly and Google Cloud TTS handle scale but usually rely on client-side VAD to cut bandwidth and cost.

The trade-off is clear. Cut too aggressively and you clip words. Cut too conservatively and you add delay. Real-world applications show the balance. Call centres get 95% accuracy in noisy environments. Hospitals cut documentation time by 40% using Silero VAD. Banks achieve 99.5% accuracy for voice transactions. Healthcare dictation apps segment audio with Silero before streaming to Azure for playback.

VAD may seem small, but it is the switch that makes speech systems sound natural, fast, and reliable.

VAD works at three stages: breaking up long text input, saving compute during synthesis, and cleaning up the final audio output.

The TTS landscape: a technical overview

The current TTS ecosystem spans from highly specialised open-source frameworks to sophisticated proprietary models, each with distinct capabilities and limitations.


FunAudioLLM represents a paradigm shift toward unified audio-language architectures. This multi-modal fusion approach enables simultaneous handling of TTS, Automatic Speech Recognition (ASR), and speech understanding within a single computational framework.

The model's strength lies in its emotional context awareness and multi-language synthesis capabilities, achieved through advanced transformer architectures that maintain contextual coherence across different modalities.

However, the unified architecture introduces computational overhead that can impact real-time performance requirements.

SpeechCueLLM focuses on prosodic modelling and contextual speech synthesis. Its architecture emphasises attention mechanisms that capture subtle linguistic cues, enabling nuanced control over emphasis, rhythm, and emotional colouring.

The model's strength in conversational AI applications stems from its ability to maintain discourse-level coherence while adapting prosodic features based on textual context.

This sophisticated processing, while producing high-quality output, introduces latency challenges in real-time applications.

Coqui TTS stands out as the most architecturally diverse framework, supporting multiple synthesis approaches, including autoregressive models like Tacotron, non-autoregressive architectures such as FastSpeech, and flow-based models like Glow-TTS. The VITS (Variational Inference with adversarial learning for end-to-end Text-to-Speech) integration provides particularly impressive audio quality through its adversarial training approach.

The framework's modular design allows for fine-tuned optimisation but requires careful architectural selection based on specific use cases.

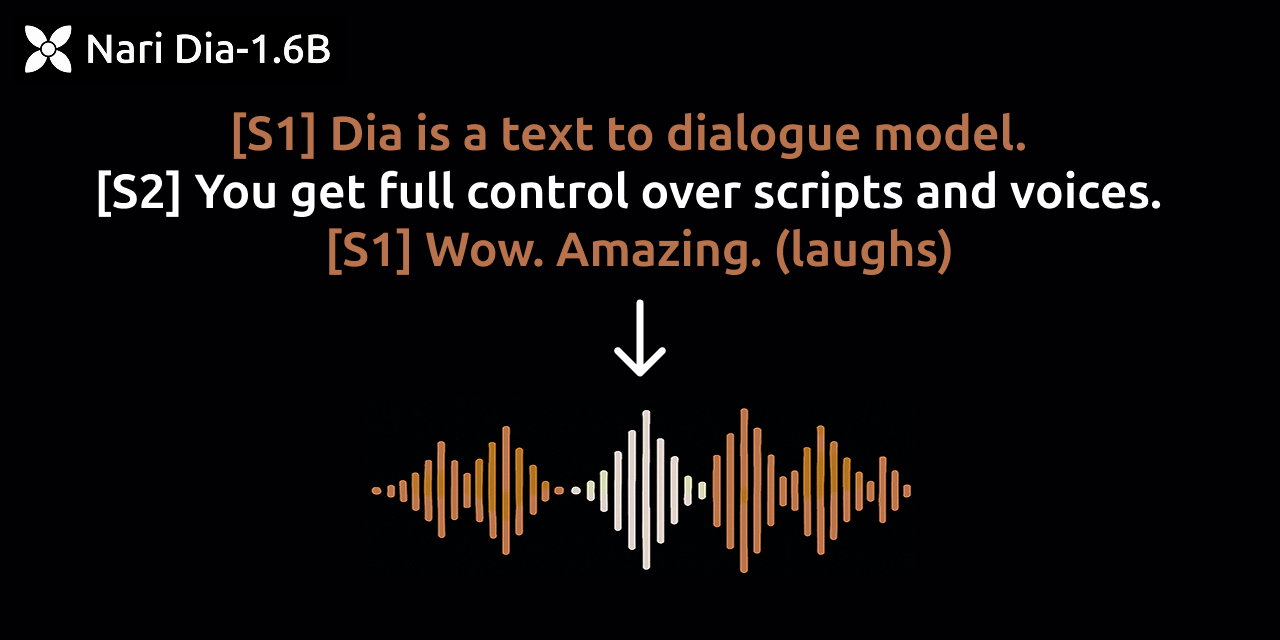

Dia specialises in dialogue-centric synthesis with particular emphasis on paralinguistic features. The model incorporates explicit modelling of non-verbal vocalisations, breath patterns, and character-specific voice characteristics. This specialisation makes it invaluable for interactive applications but introduces complexity in terms of computational requirements and model size.

Qwen-Omni leverages Alibaba's multi-modal architecture to provide contextually aware speech synthesis. The model's ability to process cross-modal information enables sophisticated context-dependent prosodic adjustments.

However, this multi-modal processing capability comes with increased computational overhead and memory requirements.

SALMONN, developed by Tsinghua University and ByteDance, represents an interesting approach to multi-modal foundation models with integrated TTS capabilities. The model's architecture combines audio, text, and visual processing streams, enabling rich contextual understanding for speech generation.

While promising for research applications, the multi-modal architecture introduces latency concerns for real-time deployment.

OpenAI TTS sets the benchmark for proprietary TTS systems with its streaming synthesis capabilities and exceptional emotion fidelity. The model's architecture appears optimised for real-time performance while maintaining high-quality output.

The streaming approach enables progressive audio generation, significantly reducing perceived latency compared to batch processing models.

Gemini TTS from Google DeepMind integrates sophisticated multi-modal reasoning capabilities with speech synthesis.

The model's strength lies in its ability to maintain natural conversational pacing while adapting to context. The integration with Google's infrastructure provides optimised performance characteristics that are difficult to replicate in open-source implementations.


LLaMA-Omni, built on Meta's Llama models, represents an experimental approach to unified language and audio processing.

The shared representation learning approach shows promise for creating more efficient multimodal models, though the current implementations remain primarily research-focused.

VAD implementation challenges

The integration of Voice Activity Detection with these diverse TTS architectures reveals several fundamental challenges.

Traditional VAD approaches rely on acoustic features extracted from short-term analysis windows, but the characteristics of TTS-generated audio can differ significantly from natural speech patterns.

Energy-based detection framework

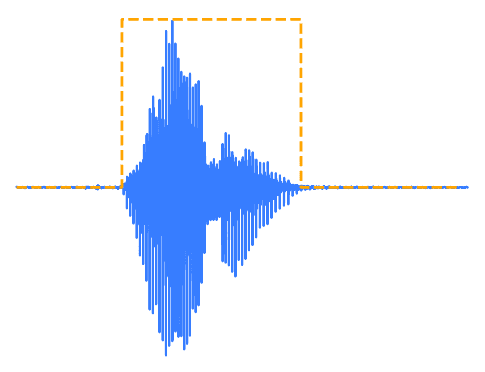

The core VAD implementation centres on short-term energy analysis with adaptive thresholding. The approach involves segmenting audio streams into overlapping analysis frames, typically 20-30 milliseconds in duration with 10-15 millisecond hop sizes. This granular analysis enables the detection of rapid voice onset and offset events while maintaining computational efficiency.
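The framing step described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the function name `frame_signal`, the 25 ms frame and 10 ms hop defaults, and the choice to drop a trailing partial frame are all assumptions made for the example.

```python
def frame_signal(samples, sample_rate, frame_ms=25.0, hop_ms=10.0):
    """Split a mono signal into overlapping, fixed-length frames."""
    frame_len = int(sample_rate * frame_ms / 1000)
    hop_len = int(sample_rate * hop_ms / 1000)
    if frame_len <= 0 or hop_len <= 0:
        raise ValueError("frame_ms and hop_ms must each cover at least one sample")
    frames = []
    start = 0
    # Trailing samples that do not fill a whole frame are dropped (an assumption).
    while start + frame_len <= len(samples):
        frames.append(samples[start:start + frame_len])
        start += hop_len
    return frames


# One second of silence at 16 kHz: 400-sample frames taken every 160 samples.
one_second = [0.0] * 16000
example_frames = frame_signal(one_second, sample_rate=16000)
print(len(example_frames), len(example_frames[0]))
```

Because the hop is shorter than the frame, consecutive frames overlap, which is what lets the detector react to onsets and offsets at hop resolution rather than frame resolution.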

The Mean Square Energy calculation provides a robust foundation for voice detection, but the challenge lies in establishing appropriate threshold values that adapt to varying acoustic conditions. The noise floor estimation becomes particularly critical when dealing with TTS-generated audio, as the spectral characteristics may differ from natural speech patterns.

Dynamic threshold adaptation proves essential for maintaining detection accuracy across diverse audio conditions. The scaling factor selection requires careful calibration based on the specific TTS model characteristics and expected deployment environments.
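As a rough sketch of how mean square energy and an adaptive threshold fit together, the example below tracks a noise floor with an exponential moving average and flags a frame as speech when its energy exceeds a scaled version of that floor. The names `mean_square_energy` and `adaptive_energy_vad`, the scaling factor of 3.0, the smoothing constant, and the assumption that the first few frames contain only noise are illustrative choices, not values taken from any particular TTS model or VAD library.

```python
def mean_square_energy(frame):
    """Average of squared sample values in one frame."""
    if not frame:
        return 0.0
    return sum(x * x for x in frame) / len(frame)


def adaptive_energy_vad(frames, scale=3.0, alpha=0.95, init_frames=5):
    """Return one boolean speech decision per frame."""
    energies = [mean_square_energy(f) for f in frames]
    if not energies:
        return []
    # Assumption: the first few frames are noise only and seed the noise floor.
    n0 = min(init_frames, len(energies))
    noise_floor = sum(energies[:n0]) / n0
    decisions = []
    for energy in energies:
        # A small lower bound keeps the threshold above zero for digital silence.
        threshold = scale * max(noise_floor, 1e-10)
        is_speech = energy > threshold
        if not is_speech:
            # Update the noise floor only on frames judged to be non-speech.
            noise_floor = alpha * noise_floor + (1 - alpha) * energy
        decisions.append(is_speech)
    return decisions


quiet = [0.01, -0.01, 0.01, -0.01]
loud = [0.5, -0.5, 0.5, -0.5]
print(adaptive_energy_vad([quiet] * 5 + [loud] * 3 + [quiet] * 2))
```

Freezing the noise floor while speech is detected prevents loud passages from dragging the threshold upward. The scaling factor is the knob that would need calibrating per model and environment.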

Post-processing and temporal smoothing

Raw energy-based detection often produces fragmented results that require sophisticated post-processing. Temporal smoothing algorithms help eliminate spurious detections while preserving legitimate voice activity boundaries. Median filtering approaches provide effective noise reduction while maintaining temporal resolution.
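For a sequence of per-frame speech decisions, a median filter reduces to a sliding majority vote. The sketch below assumes boolean decisions and an odd window length; the function name `median_smooth` and the handling of edges (a truncated window, with ties resolved as non-speech) are choices made for this example.

```python
def median_smooth(decisions, window=5):
    """Smooth boolean VAD decisions with a sliding median (majority vote)."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd number")
    half = window // 2
    n = len(decisions)
    smoothed = []
    for i in range(n):
        # The window is truncated at the edges of the sequence.
        neighbourhood = decisions[max(0, i - half):min(n, i + half + 1)]
        # For booleans, the median is True when more than half the values are True.
        smoothed.append(sum(neighbourhood) * 2 > len(neighbourhood))
    return smoothed


raw = [False, False, True, False, False, True, True, True, False, True, True, True]
print(median_smooth(raw, window=3))
```

In this example the isolated `True` at index 2 is removed and the single-frame dropout at index 8 is filled, while the onset at index 5 stays where it was.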

The challenge of minimum voice segment duration becomes apparent when dealing with TTS models that generate audio with varying prosodic characteristics. Some models produce more natural pauses and breathing patterns, while others generate more uniform energy distributions that can confuse traditional VAD algorithms.
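One simple way to enforce a minimum voice segment duration is to convert frame decisions into time intervals and discard runs that are too short. The following is a sketch under stated assumptions: each decision is taken to represent one hop of audio, and the 50 ms minimum and the name `decisions_to_segments` are hypothetical.

```python
def decisions_to_segments(decisions, hop_ms=10.0, min_speech_ms=50.0):
    """Turn per-frame booleans into (start_ms, end_ms) speech segments."""
    segments = []
    start = None
    # A trailing False closes any segment still open at the end of the input.
    for i, is_speech in enumerate(list(decisions) + [False]):
        if is_speech and start is None:
            start = i
        elif not is_speech and start is not None:
            if (i - start) * hop_ms >= min_speech_ms:
                segments.append((start * hop_ms, i * hop_ms))
            start = None
    return segments


flags = [False, True, True, False] + [True] * 6 + [False]
print(decisions_to_segments(flags))
```

Here the 20 ms burst is dropped and the 60 ms run is kept. A model that produces breaths or short fillers may need a lower minimum than one with uniform energy.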

Edge vs cloud choice

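On-device: roughly 20 ms detection latency, private, and works offline, but constrained by limited processing power.

Cloud: more advanced models that are always up to date, at the cost of 50-200 ms of network delay.

Hybrid: the phone does basic filtering and the cloud does the complex processing, for about 180 ms total versus 400 ms cloud-only.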

Real-time performance optimisation

The performance characteristics of open-source TTS models present significant challenges for real-time applications. Latency accumulation occurs at multiple stages: text processing, acoustic feature generation, vocoder synthesis, and final audio rendering. Each stage contributes to the overall system delay, making real-time conversational applications challenging.
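To see where latency accumulates, it can help to time each stage separately. The harness below is a minimal sketch: `timed_pipeline` is a hypothetical helper, and the two lambda stages are placeholders standing in for real text processing and synthesis steps.

```python
import time


def timed_pipeline(stages, payload):
    """Run (name, function) stages in order and record each stage's latency in ms."""
    timings = {}
    for name, stage in stages:
        started = time.perf_counter()
        payload = stage(payload)
        timings[name] = (time.perf_counter() - started) * 1000.0
    return payload, timings


# Placeholder stages; real ones would wrap text normalisation, the acoustic
# model, the vocoder, and audio rendering.
demo_stages = [
    ("text_processing", lambda text: text.strip().lower()),
    ("synthesis", lambda text: [0.0] * (len(text) * 100)),
]
audio, stage_ms = timed_pipeline(demo_stages, "  Hello there  ")
print(stage_ms, "total:", sum(stage_ms.values()))
```

Summing the per-stage values gives the end-to-end delay for a single request, which makes it easier to see which stage to optimise first.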

Resource utilisation patterns vary dramatically across different TTS architectures. Autoregressive models like Tacotron require sequential processing that inherently limits parallelisation opportunities. Non-autoregressive models offer better parallelisation potential but often require larger model sizes to maintain quality.

Memory bandwidth becomes a critical bottleneck when processing continuous audio streams. The combination of TTS generation and concurrent VAD processing can strain system resources, particularly when maintaining multiple parallel processing streams.

WebRTC integration and network optimisation

The implementation of WebRTC-based real-time communication requires careful consideration of network latency and packet loss characteristics. The FastRTC library provides optimised WebSocket implementations that reduce protocol overhead compared to standard WebRTC implementations.

WebSocket client optimisation involves implementing adaptive buffering strategies that balance latency requirements with network stability. The challenge lies in maintaining consistent audio quality while accommodating varying network conditions and processing delays.
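One possible shape for an adaptive buffering strategy is a playout buffer whose target delay grows with observed arrival jitter and shrinks back when the network is steady. The class below is an illustrative sketch only and is not part of FastRTC or any WebRTC stack; `AdaptiveJitterBuffer`, its gain, its smoothing constant, and its delay bounds are all assumptions.

```python
class AdaptiveJitterBuffer:
    """Tracks arrival jitter and suggests a playout delay in milliseconds."""

    def __init__(self, frame_ms=20.0, min_ms=20.0, max_ms=200.0, gain=4.0, alpha=0.125):
        self.frame_ms = frame_ms    # expected spacing between audio packets
        self.min_ms = min_ms        # never buffer less than this
        self.max_ms = max_ms        # never buffer more than this
        self.gain = gain            # how strongly jitter inflates the delay
        self.alpha = alpha          # smoothing constant for the jitter estimate
        self.jitter_ms = 0.0
        self._last_arrival_ms = None

    def on_packet(self, arrival_ms):
        """Update the jitter estimate from one packet's arrival time."""
        if self._last_arrival_ms is not None:
            spacing = arrival_ms - self._last_arrival_ms
            deviation = abs(spacing - self.frame_ms)
            # Exponential moving average of the deviation from expected spacing.
            self.jitter_ms += self.alpha * (deviation - self.jitter_ms)
        self._last_arrival_ms = arrival_ms
        return self.target_delay_ms()

    def target_delay_ms(self):
        """Suggested buffering delay, clamped to the configured bounds."""
        wanted = self.frame_ms + self.gain * self.jitter_ms
        return min(self.max_ms, max(self.min_ms, wanted))


buffer = AdaptiveJitterBuffer()
for arrival in [0.0, 20.0, 40.0, 60.0, 140.0]:
    delay = buffer.on_packet(arrival)
print(delay)
```

The trade-off is visible in the two clamps: a low `min_ms` keeps conversations responsive on good networks, while `max_ms` caps how much delay the buffer will add to hide a bad one.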

Several failure modes remain: background noise causes false triggers, multiple speakers confuse detection, and spoofed voices can bypass systems. Airport systems nonetheless maintain 94% accuracy in 70 dB noise.

Vector database integration for contextual TTS

The integration of vector databases like ChromaDB introduces additional complexity to the real-time processing pipeline. The challenge involves maintaining rapid document retrieval while ensuring contextual relevance for TTS generation.

Embedding generation and similarity search must operate within strict latency constraints to maintain conversational flow. The chunking strategy for the document corpus affects both retrieval accuracy and system performance. Optimal chunk sizes balance contextual coherence with retrieval speed.
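A common way to trade contextual coherence against retrieval speed is fixed-size chunking with overlap. The sketch below splits on whitespace for simplicity; the function name `chunk_words` and the default sizes are assumptions, and a production system might chunk by tokens or sentences instead.

```python
def chunk_words(text, chunk_size=200, overlap=40):
    """Split text into word chunks where neighbouring chunks share `overlap` words."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once a chunk has reached the end of the document.
        if start + chunk_size >= len(words):
            break
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
print(chunk_words(sample, chunk_size=4, overlap=1))
```

Larger chunks keep more context together but produce fewer, coarser embeddings; the overlap reduces the chance that a relevant sentence is split across a chunk boundary.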

Incoming voice queries undergo immediate transcription through the VAD-integrated pipeline, with real-time speech-to-text conversion feeding directly into the vector search system. Query preprocessing includes entity recognition, keyword extraction, and semantic expansion to improve retrieval accuracy.

The query embedding generation occurs in parallel with VAD processing, enabling simultaneous voice activity detection and contextual preparation. This parallelisation reduces overall system latency by eliminating sequential processing bottlenecks.

- Stage 1: Broad Semantic Matching - Initial vector similarity search returns top-k candidates from the entire corpus, typically retrieving 50-100 potential matches based on cosine similarity scores.

- Stage 2: Contextual Refinement - Secondary filtering applies conversation history awareness, user preference weighting, and temporal relevance scoring to narrow the candidate set to 10-15 highly relevant chunks.

- Stage 3: Coherence Optimisation - Final selection considers chunk adjacency, topic continuity, and response length constraints to identify 3-5 optimal chunks for TTS integration.
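The three stages above can be sketched as a small retrieval funnel. This is a simplified illustration in plain Python rather than ChromaDB code: the function names, the 0.8/0.2 blend of query and conversation-history similarity, and the word budget are hypothetical, and stage 3 here only enforces a count and length limit rather than modelling chunk adjacency or topic continuity.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query_vec, chunks, history_vec=None,
             k_broad=50, k_refined=10, k_final=3, max_words=120):
    """Each chunk is a dict with 'text' and 'vec' keys (an assumed layout)."""
    # Stage 1: broad semantic matching by cosine similarity.
    broad = sorted(chunks, key=lambda c: cosine(query_vec, c["vec"]),
                   reverse=True)[:k_broad]

    # Stage 2: contextual refinement, here a blend with conversation history.
    def refined_score(chunk):
        score = cosine(query_vec, chunk["vec"])
        if history_vec is not None:
            score = 0.8 * score + 0.2 * cosine(history_vec, chunk["vec"])
        return score

    refined = sorted(broad, key=refined_score, reverse=True)[:k_refined]

    # Stage 3: greedy selection under a response-length budget.
    selected, used_words = [], 0
    for chunk in refined:
        n_words = len(chunk["text"].split())
        if len(selected) < k_final and used_words + n_words <= max_words:
            selected.append(chunk)
            used_words += n_words
    return selected


corpus = [
    {"text": "alpha beta", "vec": [1.0, 0.0]},
    {"text": "gamma", "vec": [0.9, 0.1]},
    {"text": "delta", "vec": [0.0, 1.0]},
    {"text": "epsilon zeta", "vec": [0.5, 0.5]},
]
print([c["text"] for c in retrieve([1.0, 0.0], corpus, k_final=2)])
```

Each stage works on a smaller candidate set than the one before, so the more expensive scoring is applied to fewer chunks.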

Parallel processing architecture

The system implements asynchronous processing patterns that enable concurrent execution of VAD, transcription, vector search, and TTS preparation. Thread pooling and connection pooling minimise resource initialisation overhead while maintaining system responsiveness.
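A minimal sketch of this concurrency pattern using Python's `asyncio` follows. The coroutines `run_vad` and `embed_query` are hypothetical stand-ins that simulate work with short sleeps; in a real system they would call the VAD model and the embedding service.

```python
import asyncio


async def run_vad(audio_chunk):
    """Stand-in for voice activity detection on one audio chunk."""
    await asyncio.sleep(0.01)  # simulated processing time
    return {"speech": len(audio_chunk) > 0}


async def embed_query(partial_text):
    """Stand-in for query embedding generation."""
    await asyncio.sleep(0.01)  # simulated processing time
    return [float(len(partial_text)), 0.0]


async def handle_turn(audio_chunk, partial_text):
    # Both coroutines are scheduled together, so their waits overlap
    # instead of running one after the other.
    vad_result, query_vec = await asyncio.gather(
        run_vad(audio_chunk),
        embed_query(partial_text),
    )
    return vad_result, query_vec


print(asyncio.run(handle_turn(b"\x00\x01", "hello")))
```

With `asyncio.gather`, the turn takes roughly as long as the slower of the two steps rather than their sum, provided both steps spend their time waiting on I/O. CPU-bound work would need a thread or process pool instead.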

Pipeline batching combines multiple retrieval operations where possible, reducing per-query overhead while maintaining individual response quality.

Users notice delays over 250 ms. Aggressive VAD clips words, which frustrates users. A/B tests show neural VAD achieves 12% higher satisfaction and 8% better task completion.

Conclusion

A well-designed Voice Activity Detection layer is what separates experimental speech systems from those ready for real-world use. As TTS architectures evolve from focused synthesis engines to multi-modal foundation models, the role of VAD only grows more critical. It is no longer about detecting silence alone, but about aligning detection methods with the unique acoustic and computational profiles of each model.

The trade-offs are clear. Performance gains in one area can create new bottlenecks in another, whether in network stability, model latency, or memory bandwidth. The most effective implementations balance these competing forces by treating VAD as an integrated component of the pipeline rather than a simple preprocessor.

For developers and researchers, this means thinking of VAD not as an accessory but as a control mechanism that ensures speech systems respond in real time, preserve conversational flow, and deliver natural output at scale. In practice, the success of modern TTS deployments will depend as much on how VAD is engineered as on the synthesis models themselves.
