Parakeet-TDT-0.6B-v2 is a 600-million-parameter automatic speech recognition model designed for high-quality English transcription. Despite its relatively modest size compared to multi-billion parameter alternatives, this model delivers exceptional performance across a wide range of benchmarks.

The name “Parakeet” represents NVIDIA’s family of ASR models, while “TDT” refers to the innovative Token-and-Duration Transducer architecture that powers it. The “0.6B” indicates its parameter count (600 million), and “v2” signifies this is an improved second version of the model.

Key features of Parakeet-TDT-0.6B-v2 include:

- Accurate word-level timestamp predictions

- Automatic punctuation and capitalisation

- Robust performance on spoken numbers and song lyrics transcription

- Support for processing audio segments up to 24 minutes in a single pass

- Impressive processing speed, transcribing up to 60 minutes of audio in just one second under optimal conditions

Key features and capabilities

Parakeet-TDT-0.6B-V2 is a 600-million-parameter ASR model designed specifically for high-quality English transcription. The model offers several standout features:

- Accurate word-level timestamp predictions: Precise timing information for each word in the transcript

- Automatic punctuation and capitalisation: Naturally formatted text output without additional post-processing

- Long-form audio processing: Efficient transcription of audio segments up to 24 minutes in a single pass

- Impressive processing speed: Achieves an RTF (Real-Time Factor) of 3380 on the HF-Open-ASR leaderboard with a batch size of 128

- Robust performance on challenging content: Handles spoken numbers and song lyrics with high accuracy

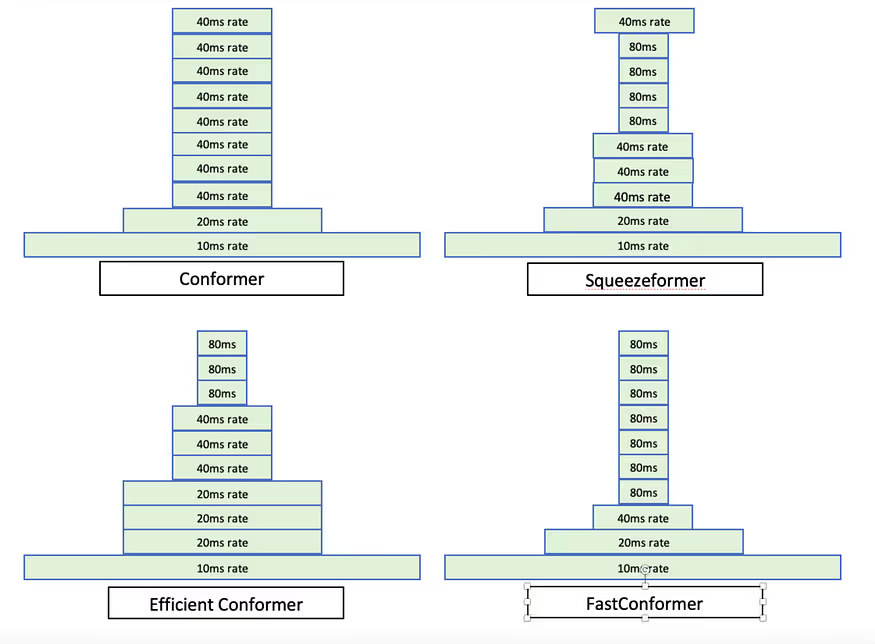

The model is based on NVIDIA’s FastConformer architecture with the Token Duration Transducer (TDT) decoder, combining cutting-edge design elements to achieve state-of-the-art results.

Model architecture: FastConformer meets TDT

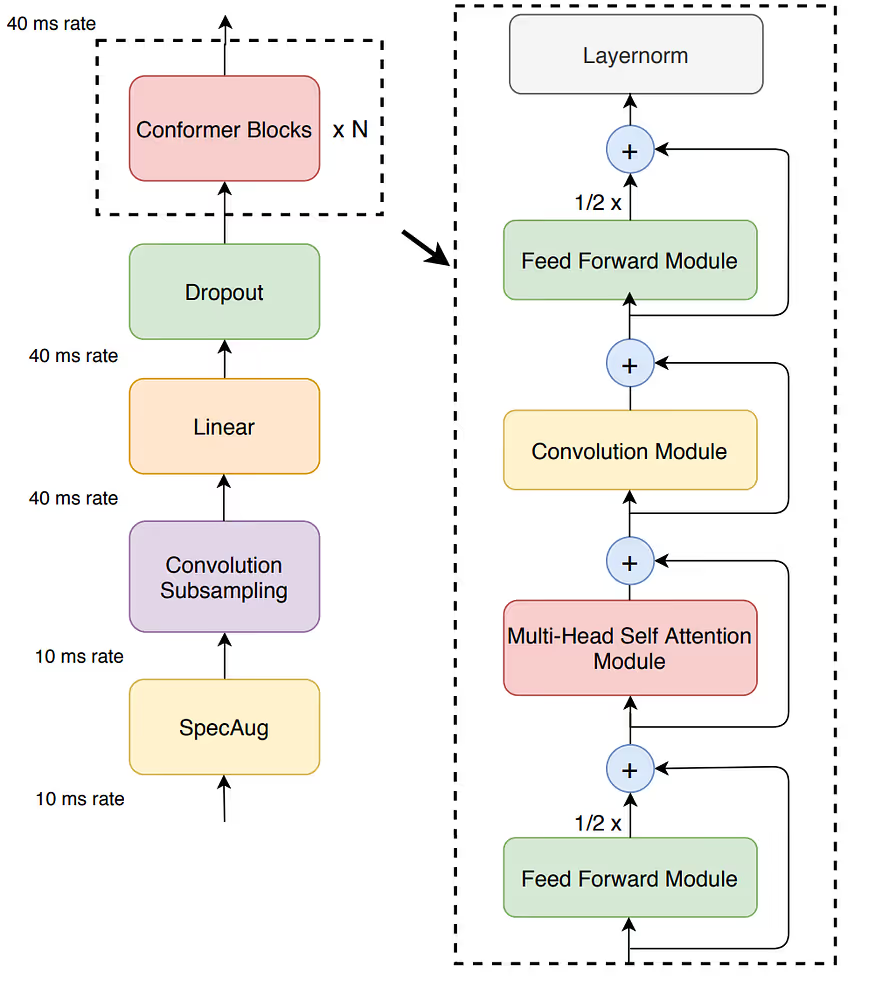

FastConformer: Optimised encoder architecture

The Parakeet-TDT-0.6B-v2 model is built on the FastConformer encoder architecture, a highly optimised version of the standard Conformer model that has dominated speech recognition tasks in recent years. FastConformer introduces several key innovations that significantly enhance performance:

- Enhanced downsampling: FastConformer implements an 8x depthwise convolutional subsampling with 256 channels, which efficiently reduces the input sequence length early in the model pipeline, thereby decreasing the computational load for subsequent layers.

- Depthwise separable convolutions: Instead of using standard convolutions, FastConformer utilises depthwise separable convolutions, which factorise the convolution operation into two separate steps - a depthwise convolution followed by a pointwise convolution. This reduces both the parameter count and computational complexity.

- Channel reduction: The architecture employs a reduced channel count in the downsampling module (256 channels), which helps minimize the model’s parameter footprint without significantly impacting performance.

- Reduced kernel size: FastConformer uses a convolutional kernel size of 9 (down from 31 in the original Conformer), which maintains accuracy while decreasing computation time.

These modifications result in an encoder that is approximately 2.4–2.8 times faster than the regular Conformer encoder without significant quality degradation. Additionally, FastConformer supports efficient processing of long audio sequences through a linearly scalable attention mechanism inspired by the Longformer approach, which combines local attention with global tokens to maintain performance while reducing computational overhead.

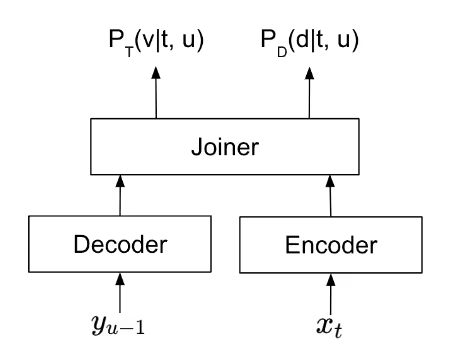

Token Duration Transducer (TDT): The secret weapon

A key innovation in the Parakeet-TDT-0.6B-V2 model is the Token Duration Transducer (TDT) decoder, which extends conventional RNN-Transducer architectures by jointly predicting:

- The token to be emitted

- The duration of that token (number of input frames it covers)

This dual prediction system uses a joint network with two independently normalised outputs that generate distributions for tokens and their durations. During inference, the TDT can skip input frames based on predicted durations, making it significantly faster than conventional Transducers that process encoder output frame by frame.

The TDT approach offers several critical advantages:

- Faster inference: By skipping frames rather than processing each one individually

- Accurate timestamps: By inherently tracking the duration of each token

- Efficient processing: By reducing computational overhead in the decoding phase

Performance benchmarks: setting new standards

The Parakeet-TDT-0.6B-V2 model demonstrates impressive performance across a variety of benchmarks, making it a top contender in the ASR space.

Word Error Rate (WER) performance

The model achieves remarkable accuracy across multiple datasets, as shown in the following table:

Dataset-specific WER scores include:

- AMI: 11.16%

- Earnings-22: 6.52%

- GigaSpeech test: 8.08%

- LibriSpeech test-clean: 1.69%

- LibriSpeech test-other: 3.70%

Noise robustness

Parakeet-TDT-0.6B-v2 maintains strong performance even in noisy environments:

- Clean audio: 6.05% WER

- SNR 50: 6.04% WER (relative +0.25%)

- SNR 25: 6.50% WER

- SNR 5: 8.39% WER

This robust performance across varying noise levels makes the model suitable for real-world applications where perfect acoustic conditions cannot be guaranteed.

Processing speed

One of the most remarkable aspects of Parakeet-TDT-0.6B-v2 is its processing speed. The model demonstrates an impressive real-time factor (RTF) of 3380 with a batch size of 128, meaning it can transcribe approximately 56 minutes of audio in just one second under optimal conditions.

Even with smaller batch sizes or on less powerful hardware, the model maintains strong performance, making it suitable for both real-time applications and batch processing scenarios.

Comparison with competitor models

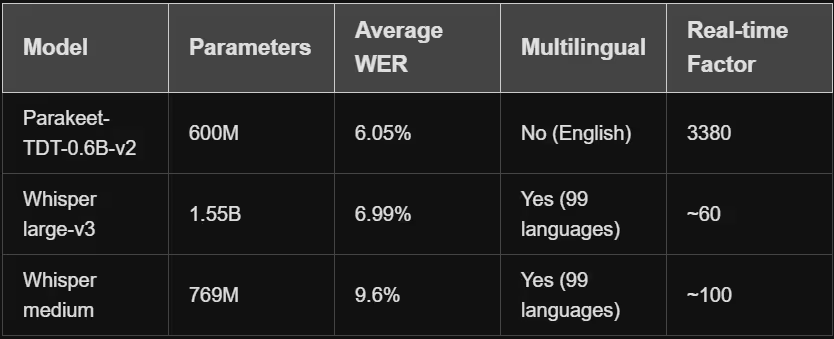

When comparing Parakeet-TDT-0.6B-V2 with other state-of-the-art ASR models, several key advantages become apparent:

vs. OpenAI’s Whisper model

- Parameter efficiency: At just 0.6B parameters, Parakeet-TDT-0.6B-V2 is significantly smaller than Whisper Large V3 (1.55B parameters), making it more resource-efficient.

- Inference speed: Parakeet-TDT-0.6B-V2 processes audio significantly faster than Whisper models, with an RTF of 3380 compared to Whisper’s lower throughput.

- Timestamp accuracy: While both models support timestamps, the TDT architecture’s native duration prediction offers more precise word-level timestamps.

- Long-form audio: Parakeet can handle up to 24 minutes of audio in a single pass, whereas Whisper models typically process shorter segments.

vs. Meta’s MMS and wav2vec 2.0

- End-to-end capabilities: Unlike wav2vec 2.0, which requires additional components for full ASR, Parakeet is an end-to-end solution.

- Punctuation and capitalisation: Parakeet natively includes punctuation and capitalisation, which many other models require post-processing to achieve.

- Optimised architecture: The FastConformer-TDT combination offers a more efficient architecture than many competing models, resulting in faster inference without sacrificing accuracy.

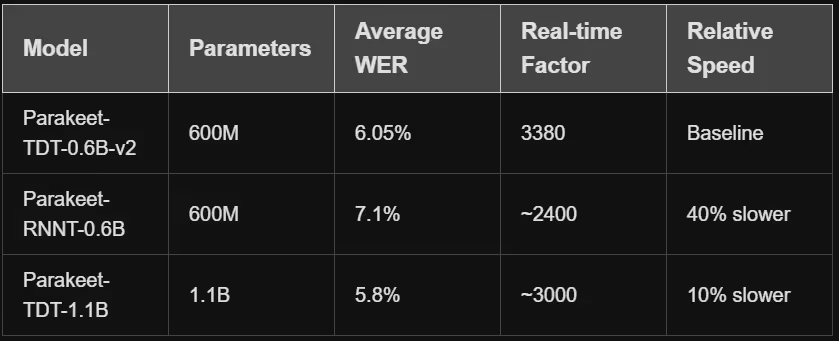

vs. Other NVIDIA ASR models

- Size vs. performance: Compared to its larger sibling, Parakeet-TDT-1.1B, the 0.6B version offers comparable performance with fewer resources.

- Decoder improvements: The TDT decoder offers advantages over previous CTC and RNNT decoders used in other NVIDIA models, particularly in terms of speed and timestamp accuracy.

Other commercial solutions

When compared to commercial ASR solutions like Google Speech-to-Text, Amazon Transcribe, and Microsoft Azure Speech, Parakeet-TDT-0.6B-v2 offers several advantages:

- Local deployment: Unlike cloud-based services, Parakeet can be deployed locally, eliminating privacy concerns and internet dependency.

- One-time cost: There are no ongoing usage fees or API call charges.

- Customisation potential: As an open model, Parakeet can be fine-tuned for specific domains or use cases.

However, commercial solutions may offer additional features like speaker diarization, enhanced multilingual support, or tighter integration with their respective cloud ecosystems.

How to use Parakeet-TDT-0.6B-V2

The model is available through NVIDIA’s NeMo toolkit and can be easily integrated into applications for inference or fine-tuning. Here’s a simple guide to get started:

Installation and setup

First, you’ll need to install the NeMo toolkit and its ASR components:

pip install -U nemo_toolkit['asr']

Basic transcription

To transcribe an audio file, you can use the following Python code:

import nemo.collections.asr as nemo_asr

# Load the model

asr_model = nemo_asr.models.ASRModel.from_pretrained(model_name="nvidia/parakeet-tdt-0.6b-v2")

# Download a sample audio file

# wget https://dldata-public.s3.us-east-2.amazonaws.com/2086-149220-0033.wav

# Transcribe the audio

output = asr_model.transcribe(['2086-149220-0033.wav'])

print(output[0].text)

Transcription with timestamps

If you need timestamp information along with the transcription:

output = asr_model.transcribe(['2086-149220-0033.wav'], timestamps=True)

# Access word-level timestamps

word_timestamps = output[0].timestamp['word']

# Access segment-level timestamps

segment_timestamps = output[0].timestamp['segment']

# Access character-level timestamps

char_timestamps = output[0].timestamp['char']

# Print segment timestamps

for stamp in segment_timestamps:

print(f"{stamp['start']}s - {stamp['end']}s : {stamp['segment']}")

Building a web UI with Gradio

You can also create a simple web interface for transcription using Gradio:

import gradio as gr

import nemo.collections.asr as nemo_asr

# Load model once

asr_model = nemo_asr.models.ASRModel.from_pretrained(model_name="nvidia/parakeet-tdt-0.6b-v2")

def transcribe_audio(audio, timestamps=False):

if audio is None:

return "Please upload a valid audio file."

output = asr_model.transcribe([audio], timestamps=timestamps)

if timestamps:

segments = output[0].timestamp['segment']

result = ""

for seg in segments:

result += f"{seg['start']}s - {seg['end']}s: {seg['segment']}\n"

return result

else:

return output[0].text

# Build the UI

with gr.Blocks() as demo:

gr.Markdown("# Parakeet-TDT-0.6B Speech to Text Demo")

with gr.Row():

audio_input = gr.Audio(type="filepath", label="Upload Audio (16kHz .wav preferred)")

with gr.Row():

timestamp_checkbox = gr.Checkbox(label="Enable Timestamps", value=False)

with gr.Row():

output_text = gr.Textbox(label="Transcription Output", lines=10)

submit_btn = gr.Button("Transcribe")

submit_btn.click(fn=transcribe_audio, inputs=[audio_input, timestamp_checkbox], outputs=output_text)

# Launch the UI

demo.launch(server_name="0.0.0.0", server_port=7860)

Setting Up a local environment

For those who want to run the model locally, here are the key requirements:

Minimum hardware requirements

- GPU: NVIDIA T4 (16 GB VRAM) or better

- vCPUs: 8+

- RAM: 16 GB

- Disk: 30–40 GB

- Works for shorter audio (<10 mins) and lower concurrency

Recommended hardware

- GPU: NVIDIA A6000, A100, or H100 for optimal performance

- RAM: 32+ GB for handling longer audio and larger batch sizes

Step-by-step installation (Ubuntu/Linux)

Install Python and dependencies:

sudo apt update

sudo apt install -y build-essential git wget curl ffmpeg

sudo apt install -y python3-pip

- Set up a virtual environment (optional but recommended):

python3 -m venv nemo_env

source nemo_env/bin/activate

- Install PyTorch with CUDA support:

pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu11

Install NeMo toolkit with ASR support:

pip install -U nemo_toolkit['asr']

Test the installation with a basic transcription:

import nemo.collections.asr as nemo_asr asr_model = nemo_asr.models.ASRModel.from_pretrained(model_name="nvidia/parakeet-tdt-0.6b-v2")

# Now you're ready to use the modCopyimport nemo.collections.asr as nemo_asr asr_model = nemo_asr.models.ASRModel.from_pretrained(model_name="nvidia/parakeet-tdt-0.6b-v2")

# Now you're ready to use the model

Technical deep dive: training details

The Parakeet-TDT-0.6B-V2 model was trained using a sophisticated approach to achieve its high performance:

Training process

- Initial checkpoint: Started with a wav2vec SSL checkpoint pretrained on the LibriLight dataset

- Training scale: Trained for 150,000 steps on 128 A100 GPUs

- Dataset balancing: Used temperature sampling with a value of 0.5 across multiple corpora

- Fine-tuning: Stage 2 fine-tuning was performed for 2,500 steps on 4 A100 GPUs using approximately 500 hours of high-quality, human-transcribed data

Training dataset

The model was trained on the Granary dataset, comprising approximately 120,000 hours of English speech data:

- 10,000 hours from human-transcribed NeMo ASR Set 3.0

- 110,000 hours of pseudo-labelled data from the YTC (YouTube-Commons) dataset, the YODAS dataset, and the Librilight

This diverse dataset ensures robust performance across various domains, accents, and recording conditions.

Best practices and optimisation tips

To get the most out of Parakeet-TDT-0.6B-v2, consider these optimisation strategies:

- Batch processing: For transcribing multiple audio files, use batching to leverage the model’s excellent batch processing capabilities.

- Audio preprocessing:

- Ensure audio is sampled at 16kHz (mono-channel)

- For optimal results, normalize audio levels

- Consider applying noise reduction for recordings in noisy environments

- GPU optimisation:

- Use mixed precision (FP16) for faster inference

- Consider a GPU with Tensor Cores for optimal performance

- Monitor VRAM usage to optimise batch sizes

2. Long audio handling:

- For files longer than 24 minutes, split into chunks with small overlaps

- Process chunks in parallel when possible

- Merge the resulting transcriptions with timestamp alignment

Applications and use cases

The exceptional performance and efficiency of Parakeet-TDT-0.6B-v2 make it suitable for a wide range of applications:

Content creation and media

- Video subtitling: Generate accurate captions for videos with precise word-level timestamps

- Podcast transcription: Convert audio podcasts to searchable text

- Media archives: Enable search and discovery in large audio/video repositories

Business and enterprise

- Meeting transcription: Capture discussions with accurate speaker attribution

- Customer service: Analyse call centre interactions for quality assurance

- Sales intelligence: Extract insights from sales calls and demos

Accessibility

- Closed captioning: Provide real-time captions for live events

- Assistive technology: Support individuals with hearing impairments

- Educational tools: Create text versions of lectures and presentations

Research and development

- Speech data analysis: Process large speech corpora efficiently

- Foundation for NLP: Provide accurate transcripts for subsequent natural language processing

- Custom ASR development: Use as a base model for fine-tuning to specific domains

Limitations and considerations

While Parakeet-TDT-0.6B-v2 offers impressive capabilities, it’s important to be aware of its limitations:

- English-only support: The model is trained specifically for English and doesn’t support other languages.

- Accuracy variations: Performance may vary based on factors such as:

- Accents and dialectal variations

- Domain-specific terminology

- Background noise and recording quality

- Speaker clarity and speech patterns

3. GPU dependency: The model is optimized for NVIDIA GPUs and requires appropriate hardware for optimal performance.

- Licensing: Usage is governed by the CC-BY-4.0 license, which has certain requirements for attribution and sharing.

Future directions

The innovations demonstrated in Parakeet-TDT-0.6B-v2 point to several exciting future directions for speech recognition technology:

- Multilingual support: Extending the TDT architecture to support multiple languages while maintaining efficiency.

- Model distillation: Further reducing model size while preserving performance through knowledge distillation techniques.

- Multimodal integration: Combining audio and visual cues for enhanced recognition in challenging environments.

- Domain adaptation: Simplified fine-tuning processes for specialised domains like medical, legal, or technical fields.

- Enhanced contextual understanding: Improved handling of contextual cues for better disambiguation and semantic interpretation.

Hugging face space: try the space here

Conclusion

NVIDIA’s Parakeet-TDT-0.6B-v2 sets a new standard in speech recognition by proving that smart architecture can outperform sheer model size. With its FastConformer encoder and Token-and-Duration Transducer decoder, it delivers exceptional accuracy, speed, and efficiency-all in a compact 600-million-parameter model.

For teams building real-world applications, it offers the best of both worlds: cutting-edge transcription quality and practical deployment, without the heavy hardware demands of larger models. Backed by the open-source NeMo toolkit, it’s easy to integrate, fine-tune, and scale across use cases ranging from media and accessibility to research and enterprise tools.

As the field moves forward, Parakeet-TDT-0.6B-v2 illustrates the future of speech recognition: purpose-built models that prioritize performance, precision, and usability over size alone.

References

- NVIDIA Parakeet-TDT-0.6B-V2 Model Card

- Fast Conformer with Linearly Scalable Attention for Efficient Speech Recognition

- Efficient Sequence Transduction by Jointly Predicting Tokens and Durations

- NVIDIA NeMo Framework Documentation

- How to Install NVIDIA Parakeet-TDT-0.6B-V2 Locally

- https://www.youtube.com/live/S19cWj6dkEI

- https://www.youtube.com/watch?time_continue=1&v=r2CrZQVtQpI&source_ve_path=Mjg2NjY

By combining the efficiency of the FastConformer architecture with the speed and accuracy benefits of the Token Duration Transducer, NVIDIA has created a truly exceptional ASR model that sets new standards for the industry while remaining accessible and practical for real-world applications.

For reference you can visit this : https://medium.com/@akshaychame2/nvidia-parakeet-tdt-0-6b-v2-a-deep-dive-into-state-of-the-art-speech-recognition-architecture-d1f0b8e61e4b