1. Introduction to LLM masking

Large Language Models (LLMs) like GPT-4, Claude, and BERT have transformed natural language processing applications, enabling sophisticated text generation, summarisation, and analysis capabilities. However, with this power comes significant responsibility, particularly regarding data privacy and security.

LLM masking refers to the process of identifying and hiding sensitive information like phone numbers, email addresses, credit card numbers, and personal names before sending text to Large Language Models. This ensures privacy, security, and compliance with data protection laws like GDPR, HIPAA, and CCPA.

LLM masking is a technique that identifies and replaces sensitive information with placeholder tokens before processing text with Large Language Models, and then reintroduces the original data afterwards if needed.

This blog offers a comprehensive guide to understanding and implementing LLM Masking techniques in your AI applications, featuring code examples, diagrams, and best practices to help you protect sensitive information while harnessing the power of LLMs.

2. Why LLM masking is important

LLM masking is not just a technical nicety- it's often a legal and ethical requirement. Here's why it matters:

- Data Privacy: Prevent personally identifiable information (PII) from being exposed to third-party LLM services

- Legal Compliance: Meet regulatory requirements like GDPR, HIPAA, and CCPA

- Reduced Risk: Minimise the chance of data breaches or unauthorised access to sensitive information

- Ethical AI Use: Respect user privacy and build trust in AI systems

- Model Training Protection: Prevent sensitive data from being incorporated into future model training

The Risks of Unmasked Data

LLMs can memorise parts of their training data and potentially reveal sensitive information in responses. Additionally, most major LLM providers retain user prompts, which could expose sensitive data if not properly masked before submission.

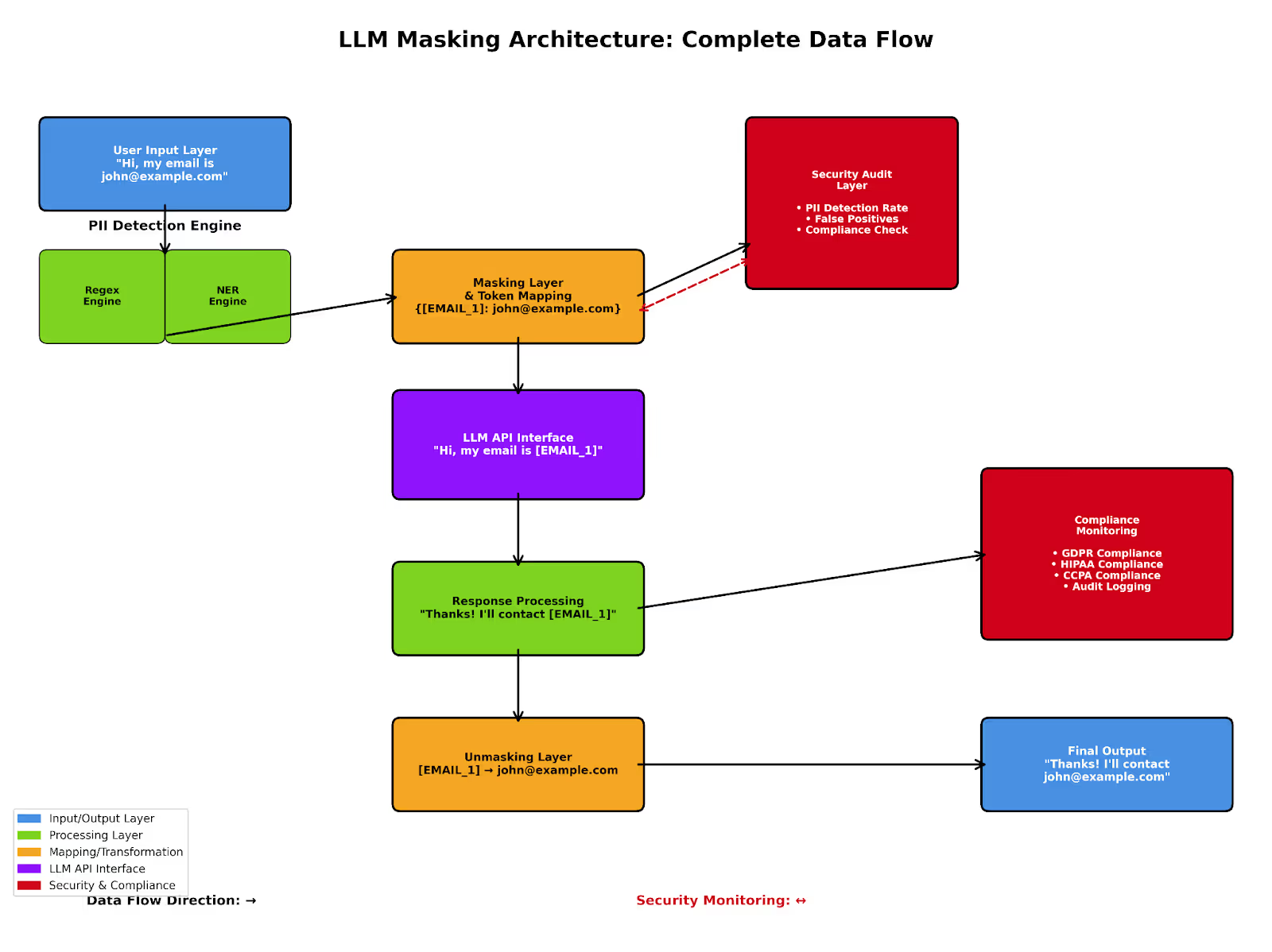

3. How LLM masking works

LLM masking follows a three-step process:

- Detection: Identify sensitive information in input text

- Masking: Replace sensitive content with placeholder tokens

- Restoration (if needed): Reintroduce the original data in the output

This process ensures that sensitive information never leaves your system while still allowing the LLM to process the non-sensitive parts of the text effectively.

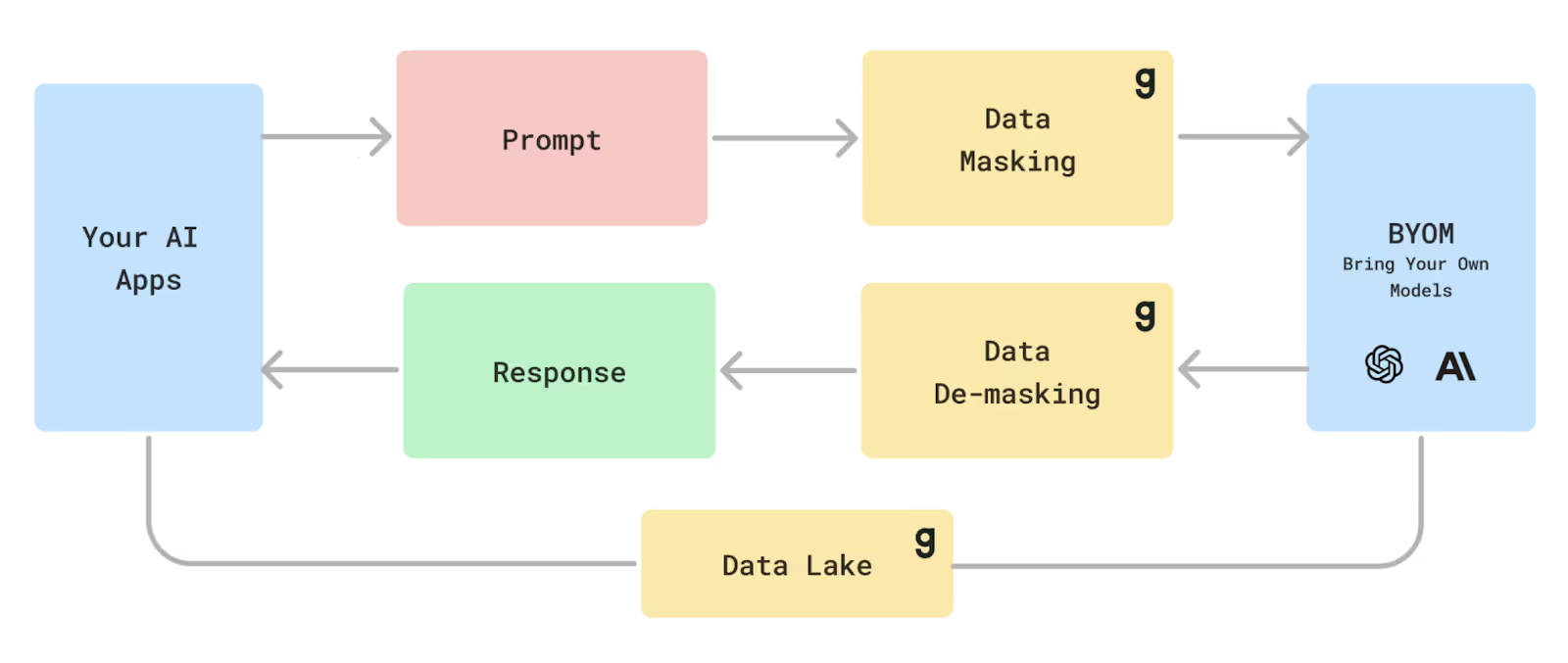

Main LLM Masking Architecture

LLM Masking Architecture

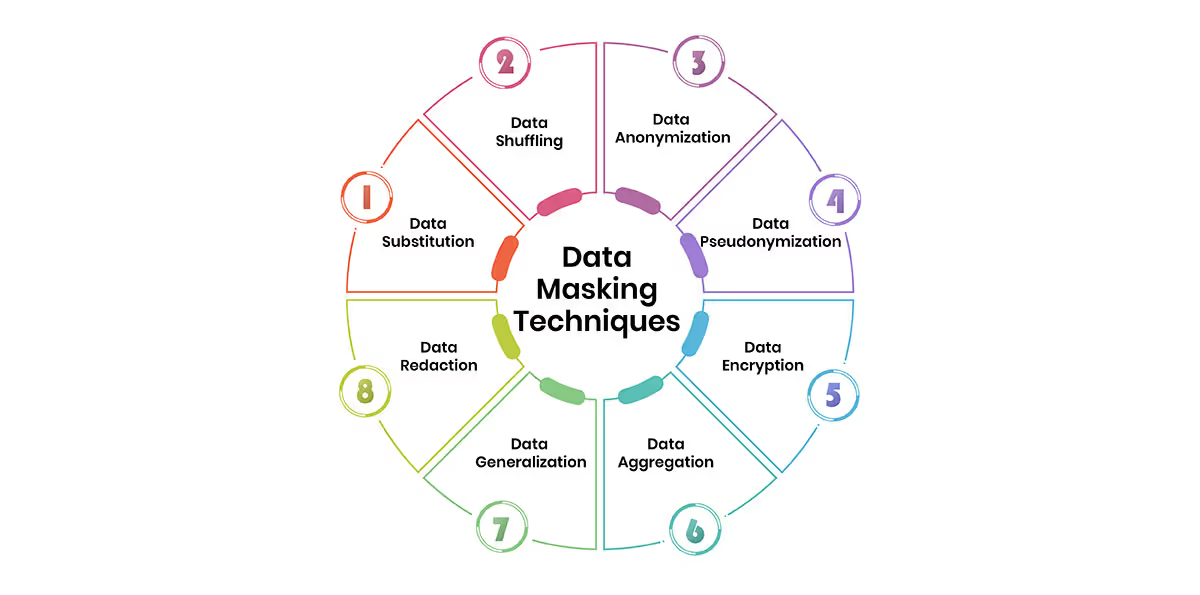

4. Common techniques for LLM masking

Several approaches can be used to implement LLM masking, each with its own strengths and weaknesses.

4.1 Regex-based approach

Regular expressions (regex) provide a straightforward method for identifying structured data patterns like email addresses, phone numbers, and credit card numbers.

Pros: Fast, lightweight, easy to implement, no external dependencies

Cons: May miss complex patterns or context-dependent PII, can produce false positives

Here are some common regex patterns used for identifying PII:

# Email addresses

email_pattern = r’[a-zA-Z0–9._%+-]+@[a-zA-Z0–9.-]+\.[a-zA-Z]{2,}’

# US phone numbers

phone_pattern = r’\b(\+\d{1,2}\s)?\(?\d{3}\)?[\s.-]\d{3}[\s.-]\d{4}\b’

# Credit card numbers

cc_pattern = r’\b(?:\d{4}[-\s]?){3}\d{4}\b’

# Social Security Numbers (US)

ssn_pattern = r’\b\d{3}[-\s]?\d{2}[-\s]?\d{4}\b’

4.2 Named Entity Recognition (NER)

Named Entity Recognition uses machine learning models to identify entities like names, organisations, locations, and other context-dependent information that might be difficult to capture with regex alone.

Pros: Better at identifying context-dependent PII, can recognise names and entities not follow specific patterns

Cons: Computationally more expensive, requires ML models, may still miss some PII types

Popular NER libraries and models include:

- SpaCy - A powerful Python NLP library with pre-trained NER models

- Hugging Face's Transformers - Provides state-of-the-art transformer-based models for NER

- Stanford NER - Java-based NER system with pre-trained models

- Custom models fine-tuned on specific domains (healthcare, legal, financial)

4.3 Hybrid approaches

Most effective LLM Masking implementations use a combination of regex and NER techniques to maximise coverage and accuracy.

Best Practice: Use regex for well-structured PII (email addresses, phone numbers) and NER for context-dependent PII (names, locations, organisations).

Some systems also employ additional techniques:

- Pattern-based data dictionaries: For identifying domain-specific sensitive information

- Contextual analysis: To reduce false positives by considering the surrounding text

- Custom classifiers: For specific types of sensitive data not covered by standard PII categories

5. Implementation examples

Let's explore practical implementations of LLM masking using different approaches.

5.1 Python code with Regex

Here's a simple implementation of regex-based PII detection and masking in Python:

import re

def mask_pii(text):

# Define regex patterns for different types of PII

patterns = {

"EMAIL": r'[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}',

"PHONE": r'\b(\+\d{1,2}\s)?\(?\d{3}\)?[\s.-]\d{3}[\s.-]\d{4}\b',

"SSN": r'\b\d{3}[-\s]?\d{2}[-\s]?\d{4}\b',

"CREDIT_CARD": r'\b(?:\d{4}[-\s]?){3}\d{4}\b',

"IP_ADDRESS": r'\b\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}\b'

}

# Create a dictionary to store masked values for restoration

masked_values = {}

masked_text = text

# Apply masking for each pattern

for pii_type, pattern in patterns.items():

matches = re.finditer(pattern, masked_text)

# Process matches in reverse to avoid offset issues when replacing

matches = list(matches)

for i, match in enumerate(reversed(matches)):

original_value = match.group(0)

mask_token = f"[{pii_type}_{i+1}]"

# Store the original value for restoration

masked_values[mask_token] = original_value

# Replace the value in the text

start, end = match.span()

masked_text = masked_text[:start] + mask_token + masked_text[end:]

return masked_text, masked_values

def unmask_pii(masked_text, masked_values):

"""Restore the original values from masked text"""

restored_text = masked_text

for mask_token, original_value in masked_values.items():

restored_text = restored_text.replace(mask_token, original_value)

return restored_text

# Example usage

text = """Hello, my name is John Smith. You can reach me at john.smith@example.com

or call me at (123) 456-7890. My credit card number is 4111-1111-1111-1111 and

my social security number is 123-45-6789."""

masked_text, masked_values = mask_pii(text)

print("Original text:")

print(text)

print("\nMasked text:")

print(masked_text)

# Assuming this is the response from an LLM

llm_response = f"I've noted your contact info: {masked_values.get('[EMAIL_1]', '[EMAIL_1]')} and {masked_values.get('[PHONE_1]', '[PHONE_1]')}"

# Unmask the response

unmasked_response = unmask_pii(llm_response, masked_values)

print("\nLLM response (unmasked):")

print(unmasked_response)

This example demonstrates a simple approach to masking and unmasking PII in text using regex patterns.

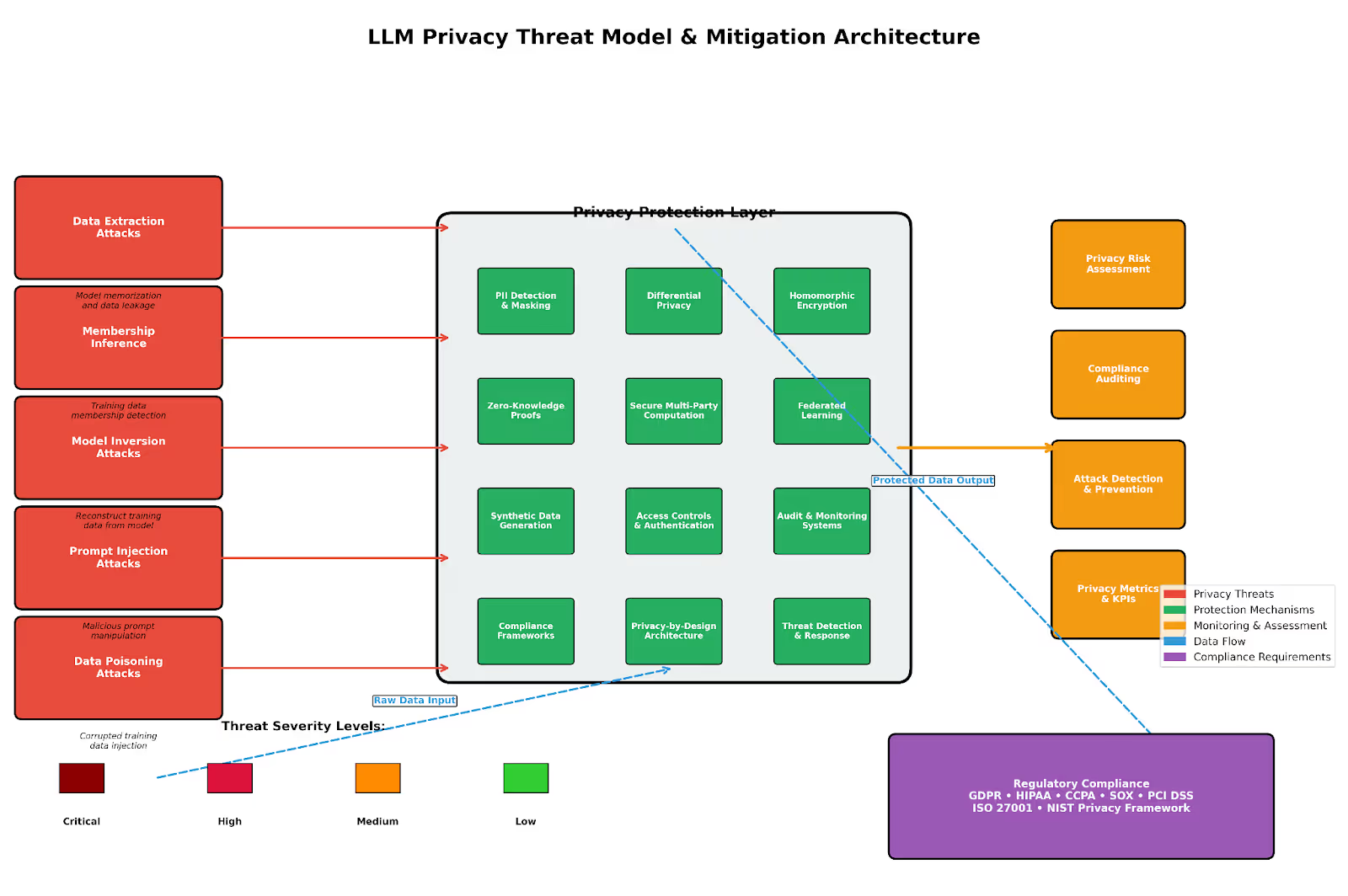

Privacy threat model architecture

5.2 Using specialised libraries

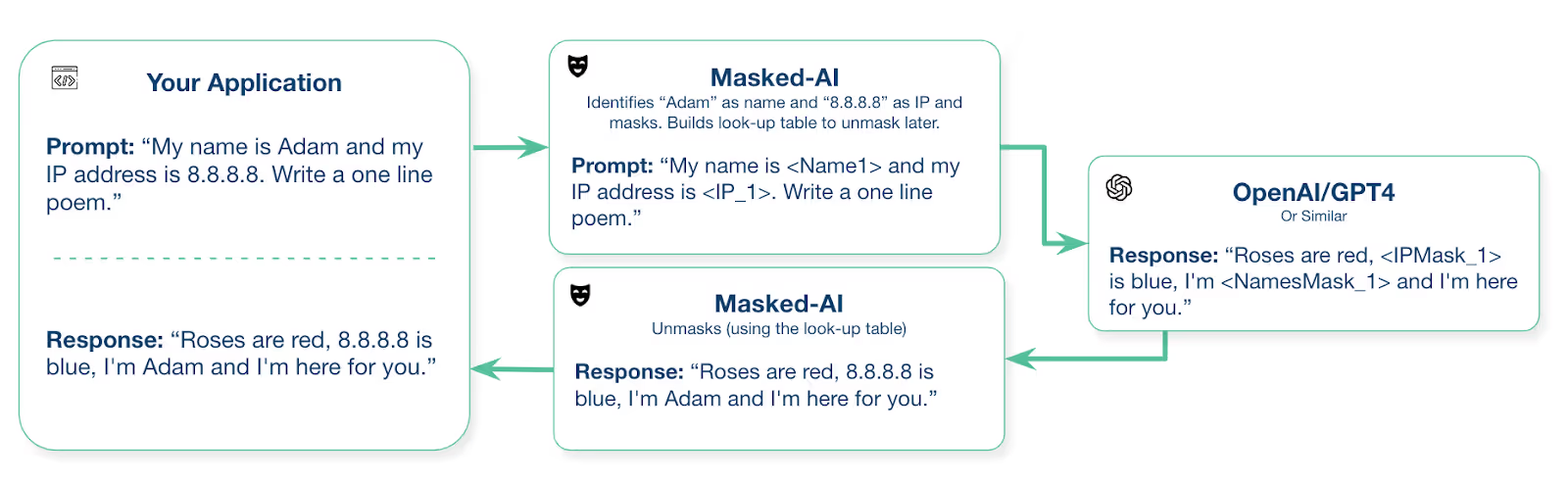

Several specialised libraries make LLM Masking more robust and easier to implement. One such library is Masked-AI.

import os

import openai

from masked_ai import Masker

# Load your API key from an environment variable

openai.api_key = os.getenv("OPENAI_API_KEY")

# Text containing sensitive information

data = "My name is Adam and my IP address is 8.8.8.8. Now, write a one line poem:"

# Create a masker instance

masker = Masker(data)

print('Masked: ', masker.masked_data)

# Send the masked data to the LLM

response = openai.Completion.create(

model="text-davinci-003",

prompt=masker.masked_data,

temperature=0,

max_tokens=1000,

)

# Get the generated text

generated_text = response.choices[0].text

print('Raw response: ', response)

# Unmask the response

unmasked = masker.unmask_data(generated_text)

print('Result:', unmasked)

Other useful libraries for PII detection and masking include:

- PiiRegex - A Python library with predefined regex patterns for PII detection

- Microsoft Presidio - An open-source framework for PII anonymisation and de-identification

- AWS Comprehend - A managed service for detecting PII entities

- spaCy-based NER - For more context-aware PII detection

Here's an example using PiiRegex:

from piiregex import PiiRegex

def mask_with_piiregex(text):

# Initialize the PiiRegex parser

parser = PiiRegex()

# Create a dictionary to store originals

masked_values = {}

masked_text = text

# Find and mask emails

emails = parser.emails(text)

for i, email in enumerate(emails):

mask_token = f"[EMAIL_{i+1}]"

masked_values[mask_token] = email

masked_text = masked_text.replace(email, mask_token)

# Find and mask phone numbers

phones = parser.phones(text)

for i, phone in enumerate(phones):

mask_token = f"[PHONE_{i+1}]"

masked_values[mask_token] = phone

masked_text = masked_text.replace(phone, mask_token)

# Find and mask credit cards

credit_cards = parser.credit_cards(text)

for i, cc in enumerate(credit_cards):

mask_token = f"[CREDIT_CARD_{i+1}]"

masked_values[mask_token] = cc

masked_text = masked_text.replace(cc, mask_token)

return masked_text, masked_values

# Example usage

text = "Contact me at john.doe@example.com or 555-123-4567. My card: 4111-1111-1111-1111"

masked_text, masked_values = mask_with_piiregex(text)

print("Original:", text)

print("Masked:", masked_text)5.3 Integration with LLM APIs

When integrating LLM masking with LLM APIs, it's important to have a robust pipeline that handles the masking and unmasking process efficiently. Here's an example of how to integrate with OpenAI's API:

import re

import os

import json

import requests

from typing import Dict, List, Tuple

class LLMMaskingPipeline:

def __init__(self):

self.api_key = os.getenv("OPENAI_API_KEY")

self.api_url = "https://api.openai.com/v1/chat/completions"

# Define regex patterns for PII detection

self.patterns = {

"EMAIL": r'[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}',

"PHONE": r'\b(\+\d{1,2}\s)?\(?\d{3}\)?[\s.-]\d{3}[\s.-]\d{4}\b',

"SSN": r'\b\d{3}[-\s]?\d{2}[-\s]?\d{4}\b',

"CREDIT_CARD": r'\b(?:\d{4}[-\s]?){3}\d{4}\b',

"IP_ADDRESS": r'\b\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}\b'

}

def detect_and_mask(self, text: str) -> Tuple[str, Dict[str, str]]:

"""Detect and mask PII in text"""

masked_values = {}

masked_text = text

for pii_type, pattern in self.patterns.items():

matches = list(re.finditer(pattern, masked_text))

# Process matches in reverse to avoid offset issues

for i, match in enumerate(reversed(matches)):

original = match.group(0)

mask_token = f"[{pii_type}_{i+1}]"

# Store for restoration

masked_values[mask_token] = original

# Replace in text

start, end = match.span()

masked_text = masked_text[:start] + mask_token + masked_text[end:]

return masked_text, masked_values

def unmask(self, text: str, masked_values: Dict[str, str]) -> str:

"""Restore masked values in text"""

for token, original in masked_values.items():

text = text.replace(token, original)

return text

def query_llm(self, prompt: str) -> str:

"""Send a prompt to OpenAI and get response"""

headers = {

"Content-Type": "application/json",

"Authorization": f"Bearer {self.api_key}"

}

data = {

"model": "gpt-3.5-turbo",

"messages": [

{"role": "user", "content": prompt}

],

"temperature": 0.7

}

response = requests.post(self.api_url, headers=headers, json=data)

response_json = response.json()

if "choices" in response_json and len(response_json["choices"]) > 0:

return response_json["choices"][0]["message"]["content"]

return "Error: Failed to get a valid response from the LLM."

def process_with_masking(self, text: str) -> str:

"""Process text with PII masking"""

# Step 1: Mask PII

masked_text, masked_values = self.detect_and_mask(text)

print(f"Masked Text: {masked_text}")

# Step 2: Send to LLM

llm_response = self.query_llm(masked_text)

print(f"Raw LLM Response: {llm_response}")

# Step 3: Unmask response

unmasked_response = self.unmask(llm_response, masked_values)

return unmasked_response

# Example usage

if __name__ == "__main__":

pipeline = LLMMaskingPipeline()

user_text = """Hi, my name is Sarah Johnson. My email is sarah.j@example.com

and my phone number is (555) 123-4567. Can you help me write a short

poem about privacy?"""

response = pipeline.process_with_masking(user_text)

print("\nFinal Response (with PII restored if necessary):")

print(response)

This example demonstrates a complete pipeline for masking sensitive information before sending text to an LLM and then restoring it in the response if needed.

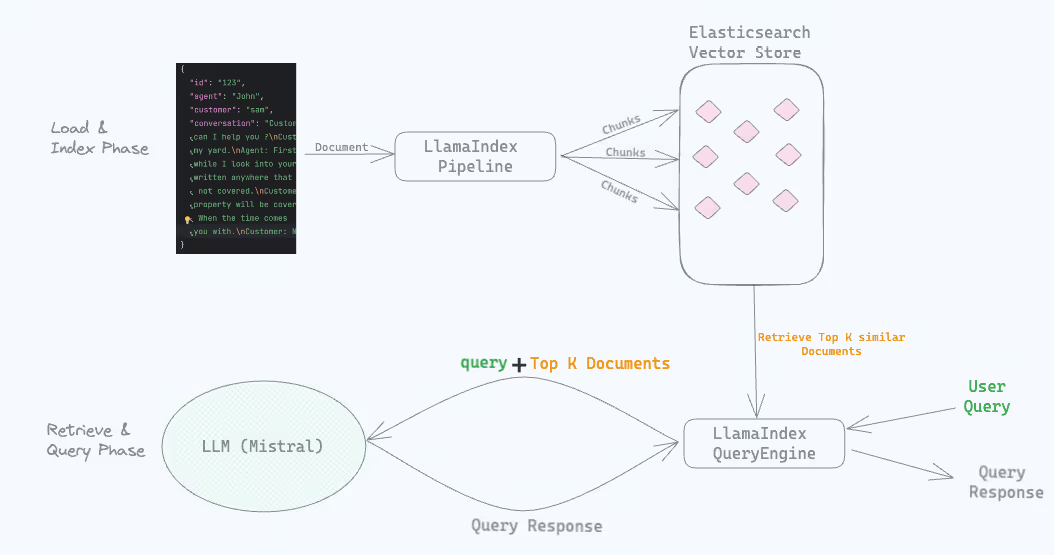

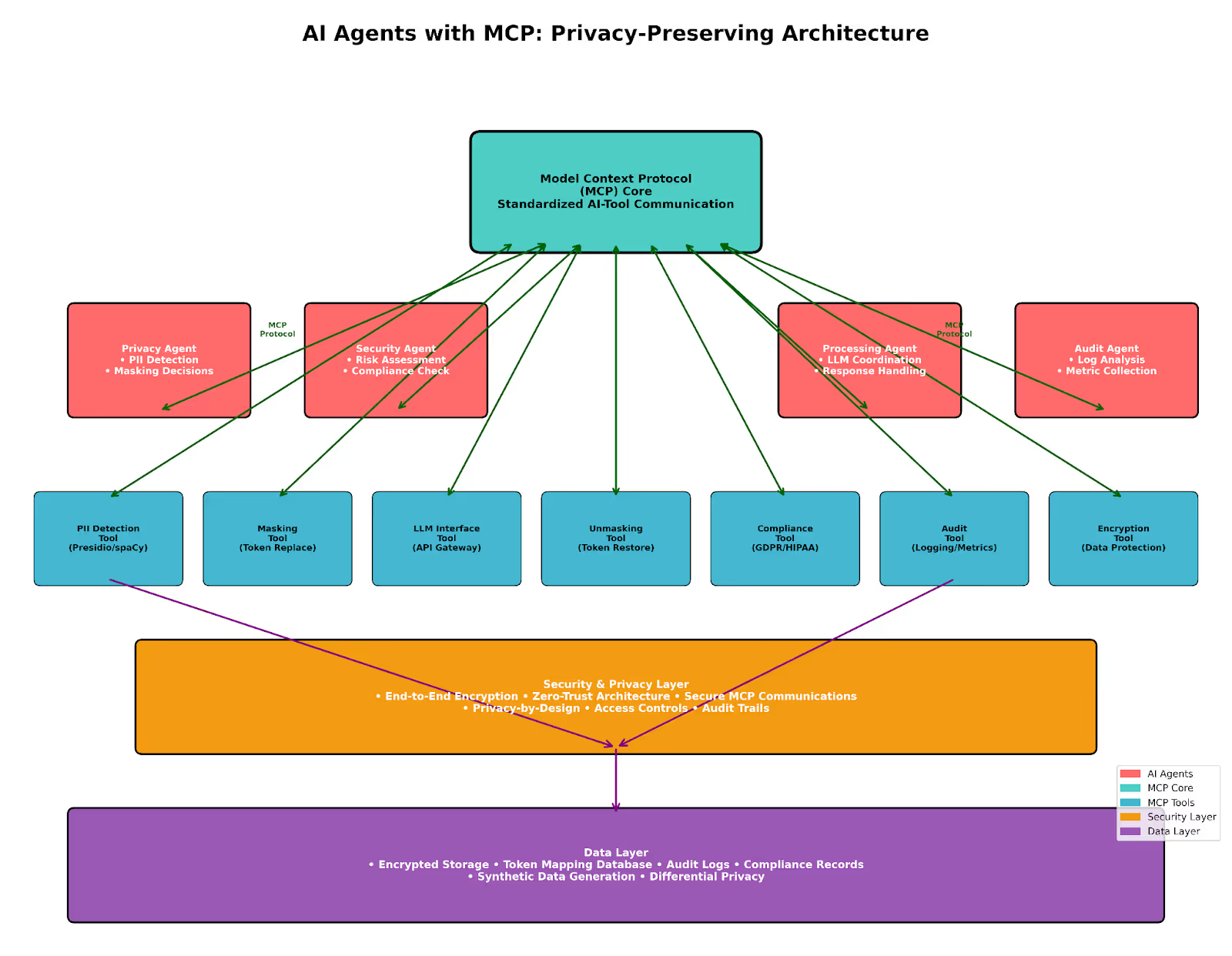

AI Agents with MCP Integration

AI Agents MCP Architecture

6. Best practices and considerations

When implementing LLM masking in your applications, consider these best practices:

Technical best practices

- Use a combination of regex and NER for more comprehensive coverage

- Test your masking implementation with diverse datasets

- Regularly update your patterns and models to cover new PII types

- Implement logging and monitoring to track masking effectiveness

- Consider the performance impact of complex masking on real-time applications

Legal and compliance considerations

- Understand your regulatory requirements (GDPR, HIPAA, CCPA, etc.)

- Document your masking processes for compliance audits

- Consider having a legal review of your masking implementation

- Be aware that some jurisdictions have specific requirements for handling sensitive data

Common pitfalls to avoid:

- Over-reliance on regex: Regex alone might miss complex or context-dependent PII

- Not handling edge cases: Different formats of the same PII type can be missed

- Ignoring international formats: PII formats vary by country (phone numbers, addresses, etc.)

- Inadequate testing: Test with real-world data to ensure effectiveness

- False positives: Overly aggressive masking can mask non-PII data

Advanced Privacy-Preserving Techniques

Differential Privacy for LLMs

import torch

from opacus import PrivacyEngine

def train_with_differential_privacy(model, dataloader, privacy_budget=1.0):

privacy_engine = PrivacyEngine()

model, optimizer, dataloader = privacy_engine.make_private_with_epsilon(

module=model,

optimizer=optimizer,

data_loader=dataloader,

epochs=epochs,

target_epsilon=privacy_budget,

target_delta=1e-5,

max_grad_norm=1.0,

)

return model

Differential privacy adds calibrated noise to training data or model outputs to provide mathematical guarantees about privacy protection. This technique is particularly useful when training LLMs on sensitive datasets. Google Research

7. Conclusion

LLM masking is a critical technique for protecting sensitive information when using Large Language Models.

By identifying and replacing PII with placeholder tokens before sending text to LLMs, you can maintain privacy and security while still leveraging the power of these AI systems.

In this guide, we've covered:

- The importance of LLM masking for privacy, security, and compliance

- How LLM masking works through detection, masking, and restoration

- Different techniques, including regex, NER, and hybrid approaches

- Practical implementation examples in Python

- Integration strategies with LLM APIs

- Best practices and considerations for effective masking

As AI systems become more integrated into critical applications, protecting sensitive information will only grow in importance. By implementing robust LLM Masking, you can ensure that your applications provide powerful AI capabilities without compromising user privacy or violating regulatory requirements.

Final Reminder

No masking system is perfect. Always design your systems with defence in depth and implement additional safeguards beyond masking alone. Regularly test and update your masking implementation to ensure it remains effective against evolving PII patterns and formats.

.avif)